In-Depth Guide to Data Pipeline Security for Data Engineers

Data pipeline security is crucial for maintaining the confidentiality, integrity, and availability of data. This in-depth guide explores various security measures, best practices, and tools that data engineers can use to build secure and reliable data pipelines.

Securing Data at Rest

Data at rest refers to data that is stored in databases, file systems, or other storage media. Ensuring the security of data at rest involves protecting it from unauthorized access, modification, or theft. Key measures for securing data at rest include:

- Encryption

- Access controls

- Secure storage solutions

Encryption

Encrypting data at rest keeps it unreadable to anyone who obtains the underlying files or storage media without the decryption key. Common encryption methods include:

- Transparent Data Encryption (TDE): Automatically encrypts data before it is stored and decrypts it when accessed by authorized users.

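- File-level encryption: Encrypts individual files, typically with a symmetric algorithm such as AES. An asymmetric algorithm such as RSA is generally used to protect the file's encryption key rather than the file contents.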

- Disk encryption: Encrypts entire storage volumes, including all files and partitions.

Access Controls

Implementing access controls ensures that only authorized users can access data at rest. Common access control mechanisms include:

- Role-Based Access Control (RBAC): Assigns roles to users, each with specific permissions to access and modify data.

- Attribute-Based Access Control (ABAC): Grants access based on user attributes, such as job title or department, and resource attributes, such as data sensitivity.

- Access Control Lists (ACLs): Specify which users or groups of users have access to specific data resources.
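The RBAC and ABAC mechanisms above can be combined in a single deny-by-default check. The following is a minimal sketch, not a production policy engine: the role names, the `User` and `Dataset` types, and the rule that restricted data is visible only to the owning department are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (RBAC); names are illustrative only.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    department: str


@dataclass(frozen=True)
class Dataset:
    name: str
    owner_department: str
    sensitivity: str  # assumed levels: "public", "internal", "restricted"


def rbac_allows(user: User, action: str) -> bool:
    """RBAC: the user's role must grant the requested action."""
    return action in ROLE_PERMISSIONS.get(user.role, set())


def abac_allows(user: User, dataset: Dataset) -> bool:
    """ABAC: restricted data is visible only to the owning department."""
    if dataset.sensitivity == "restricted":
        return user.department == dataset.owner_department
    return True


def is_allowed(user: User, action: str, dataset: Dataset) -> bool:
    """Deny by default: both the role check and the attribute check must pass."""
    return rbac_allows(user, action) and abac_allows(user, dataset)
```

An unknown role maps to an empty permission set, so a user whose role is missing from the mapping is denied rather than silently allowed.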

Secure Storage Solutions

Secure storage solutions can help protect data at rest from unauthorized access or tampering. Some examples include:

- Hardware Security Modules (HSMs): Dedicated hardware devices that provide secure storage and cryptographic processing for sensitive data.

- Cloud-based storage with built-in encryption and access controls, such as Amazon S3 or Google Cloud Storage.

- Encrypted databases, such as Azure SQL Database or Amazon RDS with TDE enabled.

Securing Data in Transit

Data in transit refers to data that is transmitted between systems, such as between a client and a server, or between two components of a data pipeline. Ensuring the security of data in transit involves protecting it from unauthorized access, modification, or interception. Key measures for securing data in transit include:

- Encryption

- Authentication

- Secure communication protocols

Encryption

Encrypting data in transit prevents anyone who intercepts the traffic from reading it. Common encryption methods include:

- Transport Layer Security (TLS): A widely used protocol for encrypting data transmitted over a network.

- Secure Shell (SSH): A protocol for securely accessing and managing remote systems, often used for transferring data between servers.

- Virtual Private Networks (VPNs): Encrypt all network traffic between a device and a remote network, protecting the confidentiality of data in transit.
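As an illustration of the TLS item above, a pipeline client written in Python could build its TLS settings with the standard `ssl` module. This is a minimal sketch: the TLS 1.2 floor is an assumed policy choice, and `build_client_context` is a hypothetical helper name.

```python
import ssl


def build_client_context() -> ssl.SSLContext:
    """Return a client-side TLS context that verifies the server."""
    # create_default_context() turns on certificate verification and
    # hostname checking against the system's trusted CA store.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (an assumed policy floor).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_client_context()
```

A client would then wrap a connected socket with `context.wrap_socket(sock, server_hostname=host)`, where `sock` and `host` stand for the caller's own socket and server name.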

Authentication

Authentication verifies the identity of the users and systems at each end of a connection, so that data in transit is exchanged only with authorized parties. Common authentication mechanisms include:

- Public Key Infrastructure (PKI): A framework for managing digital certificates and public-key encryption, enabling secure authentication and communication between parties.

- OAuth 2.0: An authorization framework that allows third-party applications to obtain limited access to an HTTP service. It is commonly paired with OpenID Connect when user authentication is required.

- Mutual TLS (mTLS) authentication: A mode of TLS in which both the client and the server authenticate each other using certificates.
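For mutual TLS, the server side has to demand a client certificate in addition to presenting its own. The sketch below uses Python's standard `ssl` module. The function name and the three file-path parameters are hypothetical, and certificate loading is skipped when no paths are given so that the settings can be inspected without real certificate files.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Return a server-side TLS context that requires client certificates."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client presents
    # a certificate that chains to a trusted CA.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own certificate and private key.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # The CA bundle used to validate client certificates.
        context.load_verify_locations(cafile=client_ca_file)
    return context
```

A real deployment would pass all three paths. Without a server certificate or a trusted client CA, handshakes on this context would not succeed.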

Secure Communication Protocols

Using secure communication protocols helps protect data in transit from unauthorized access or tampering. Some examples include:

- HTTPS: An extension of HTTP that uses TLS to encrypt data transmitted over the web.

- SSH File Transfer Protocol (SFTP), often called Secure File Transfer Protocol: A file transfer protocol that runs over SSH for encryption and authentication. Despite the name, it is a separate protocol from FTP.

- Internet Message Access Protocol over TLS (IMAPS) and Simple Mail Transfer Protocol over TLS (SMTPS): Secure versions of IMAP and SMTP that use TLS to encrypt email data in transit.

Data Pipeline Security Best Practices

Data Pipeline Design

- Least Privilege Principle: Ensure that users and applications have the minimum permissions necessary to perform their tasks.

- Defense-in-Depth: Implement multiple layers of security controls to protect data throughout the pipeline.

- Segregation of Duties: Separate responsibilities for different stages of the data pipeline to prevent unauthorized access and reduce the risk of errors.

Data Processing

- Input Validation: Validate all input data to ensure it conforms to expected formats and does not contain malicious content.

- Data Sanitization: Remove, mask, or otherwise modify sensitive data before it is processed, stored, or transmitted.

- Secure Coding Practices: Implement secure coding practices, such as avoiding hard-coded credentials and using parameterized queries to prevent SQL injection attacks.
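The three practices above can be sketched together in one small ingestion step. This example assumes a hypothetical record shape (`user_id`, `email`) and a hypothetical `users` table, and it uses an in-memory SQLite database purely for illustration. The email pattern is deliberately loose and is not a full address validator.

```python
import re
import sqlite3

# Loose illustrative pattern: something@something.something, no whitespace.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_record(record: dict) -> dict:
    """Input validation: reject records that do not match the expected shape."""
    user_id = record.get("user_id")
    email = record.get("email")
    if not isinstance(user_id, int) or isinstance(user_id, bool) or user_id <= 0:
        raise ValueError("user_id must be a positive integer")
    if not isinstance(email, str) or not EMAIL_PATTERN.match(email):
        raise ValueError("email is not in the expected format")
    return {"user_id": user_id, "email": email}


def mask_email(email: str) -> str:
    """Sanitization: keep the first character and the domain, mask the rest."""
    local, _, domain = email.partition("@")
    return f"{local[0]}***@{domain}"


def store_record(conn: sqlite3.Connection, record: dict) -> None:
    """Validate, sanitize, then insert using a parameterized query."""
    clean = validate_record(record)
    # The values are bound by the driver through "?" placeholders and are
    # never interpolated into the SQL text, which prevents SQL injection.
    conn.execute(
        "INSERT INTO users (user_id, email_masked) VALUES (?, ?)",
        (clean["user_id"], mask_email(clean["email"])),
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id INTEGER PRIMARY KEY, email_masked TEXT)")
store_record(conn, {"user_id": 1, "email": "alice@example.com"})
```

Validation and parameterization are complementary: the first rejects malformed input early, while the second keeps any value that does get through from being interpreted as SQL.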

Monitoring and Auditing

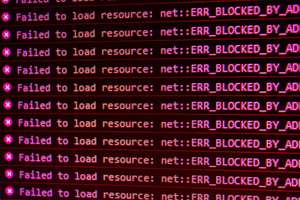

- Continuous Monitoring: Monitor the data pipeline for potential security threats, vulnerabilities, and incidents.

- Logging: Record all activities and events within the data pipeline for security, compliance, and troubleshooting purposes.

- Auditing: Regularly audit the data pipeline to ensure compliance with security policies and regulations.
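One way to make logs more useful for auditing is to make tampering detectable. The sketch below chains each audit entry to the previous one with a SHA-256 hash. This is an illustrative technique rather than something any particular tool prescribes, and the field names are assumptions. The timestamp is passed in explicitly to keep the example deterministic.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a canonical JSON encoding of the entry body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_event(log: list, timestamp: str, actor: str, action: str, resource: str) -> dict:
    """Append an audit entry that commits to the hash of the previous entry."""
    body = {
        "timestamp": timestamp,
        "actor": actor,
        "action": action,
        "resource": resource,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

A hash chain only detects edits to part of the log. Someone able to rewrite the whole log could recompute every hash, so the most recent hash would still need to be stored somewhere the pipeline cannot modify.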

Incident Response

- Incident Detection: Detect and analyze security incidents in real time using tools such as intrusion detection systems (IDS) and security information and event management (SIEM) solutions.

- Incident Response Plan: Develop and maintain a comprehensive incident response plan to effectively handle security incidents.

- Incident Recovery: Restore the affected systems and data to their normal state after a security incident, and implement measures to prevent future incidents.
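To give a sense of what a detection rule looks like, the sketch below flags sources with repeated authentication failures inside a sliding time window, which is the kind of correlation an IDS or SIEM performs at much larger scale. The event tuple format, the threshold, and the window length are assumptions chosen for illustration.

```python
from collections import defaultdict, deque


def detect_bruteforce(events, threshold=5, window_seconds=60):
    """Flag sources with at least `threshold` failures within the window.

    `events` is an iterable of (timestamp_seconds, source, outcome) tuples,
    assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)  # source -> timestamps of recent failures
    flagged = set()
    for timestamp, source, outcome in events:
        if outcome != "failure":
            continue
        window = recent[source]
        window.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while window and timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            flagged.add(source)
    return flagged
```

In practice, a rule like this would feed an alert into the incident response plan rather than act on its own.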

Data Pipeline Security Tools and Technologies

Encryption and Key Management

- AWS Key Management Service (KMS): A managed service for creating, managing, and using encryption keys.

- Google Cloud Key Management Service (KMS): A cloud-based service for managing encryption keys and cryptographic operations.

- HashiCorp Vault: A tool for managing secrets, encryption keys, and access to sensitive data.

Access Control and Authentication

- Apache Ranger: An open-source tool for centralized access control and security policy management in Hadoop environments.

- Open Policy Agent (OPA): A general-purpose policy engine for fine-grained access control across the entire data pipeline stack.

- Okta: A cloud-based identity and access management platform for securing user access to data and applications.

Monitoring and Auditing

- Elastic Stack (ELK): A suite of open-source tools for centralized logging, monitoring, and analytics.

- Splunk: A platform for real-time data analysis, monitoring, and security incident detection.

- AWS CloudTrail: A service for auditing AWS account activity and API usage.

In summary, data pipeline security is a critical aspect of data engineering that protects the confidentiality, integrity, and availability of data. By implementing robust encryption, authentication, authorization, data masking, monitoring, auditing, and incident response measures, data engineers can build secure and reliable data pipelines. This guide provides a foundation to help data engineers get started with implementing data pipeline security best practices.