Data Pipeline Security: A Comprehensive Guide for Data Engineers with Code Examples

Data pipeline security is a critical part of data engineering: it protects the integrity, confidentiality, and availability of data during processing and transmission. This guide covers core data pipeline security practices, with code examples in Python, to help data engineers build secure and reliable pipelines.

Data Pipeline Security Overview

Data pipeline security involves implementing various measures to protect data from unauthorized access, modification, or leakage. Key aspects of data pipeline security include:

- Data encryption

- Authentication and authorization

- Data masking and anonymization

- Auditing and logging

- Security monitoring

Data Encryption

Data encryption involves encoding data in such a way that only authorized parties can access it. Encryption is essential for protecting sensitive data during transmission and storage. Common encryption techniques include:

- Symmetric encryption (e.g., AES)

- Asymmetric encryption (e.g., RSA)

- Transport Layer Security (TLS)

Example: Using Python and the cryptography library to encrypt and decrypt data

    from cryptography.fernet import Fernet

    # Generate a symmetric encryption key
    key = Fernet.generate_key()

    # Create a Fernet object with the generated key
    cipher_suite = Fernet(key)

    # Encrypt data
    plaintext = b"My sensitive data"
    ciphertext = cipher_suite.encrypt(plaintext)

    # Decrypt data
    decrypted_text = cipher_suite.decrypt(ciphertext)

    assert plaintext == decrypted_text
    print(plaintext)
    print(decrypted_text)
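
The Fernet example covers symmetric encryption of data at rest. For data in transit, the TLS item in the list above can be sketched with Python's standard `ssl` module. This is a minimal sketch, not a complete client: the function name `make_client_tls_context` is hypothetical, and the TLS 1.2 floor is an assumption about what the pipeline's peers support.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks on."""
    # create_default_context() loads the system CA certificates and enables
    # certificate verification and hostname checking for client connections.
    context = ssl.create_default_context()
    # Assumption: every peer in the pipeline supports TLS 1.2 or newer.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED)
print(context.check_hostname)
```

A context like this can be passed to `urllib.request.urlopen(url, context=context)` or used with `context.wrap_socket(sock, server_hostname=host)` when opening connections to other pipeline components.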

Authentication and Authorization

Authentication and authorization involve verifying the identity of users and determining their access rights to data and resources. Implementing strong authentication and fine-grained authorization controls can help protect your data pipelines from unauthorized access. Some common methods include:

- Username/password authentication

- API keys or tokens

- Role-based access control (RBAC)

Example: Using Python and Flask to implement authentication and authorization in a simple API

    from flask import Flask, request, jsonify
    from functools import wraps

    app = Flask(__name__)

    # Simple authentication function
    def authenticate(username, password):
        # Replace with your own authentication logic.
        # The hard-coded credentials are placeholders for illustration only.
        return username == "admin" and password == "secret"

    # Authentication decorator
    def requires_auth(f):
        @wraps(f)
        def decorated(*args, **kwargs):
            # request.authorization holds the parsed HTTP Basic Auth header, if any
            auth = request.authorization
            if not auth or not authenticate(auth.username, auth.password):
                return jsonify({"message": "Unauthorized"}), 401
            return f(*args, **kwargs)
        return decorated

    # Example API route
    @app.route("/api/data", methods=["GET"])
    @requires_auth
    def get_data():
        # Replace with your own data retrieval logic
        data = {"data": "sensitive data"}
        return jsonify(data)

    if __name__ == "__main__":
        # Debug mode is left off: Flask's interactive debugger can execute
        # arbitrary code and must never be exposed outside local development.
        app.run()
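
The Flask example covers username/password authentication. The other two methods in the list, API keys and role-based access control, can be sketched with the standard library alone. Everything named here (`ROLE_PERMISSIONS`, `API_KEY_ROLES`, `role_for_api_key`, `is_allowed`, and the sample key) is hypothetical and chosen for illustration; a real pipeline would load roles and key hashes from configuration or an identity provider.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping (assumption, not from a real system).
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


def hash_api_key(api_key: str) -> str:
    """Return the SHA-256 hex digest of an API key, so raw keys are not stored."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


# Hypothetical key store mapping a key's hash to a role.
API_KEY_ROLES = {
    hash_api_key("example-key-for-docs-only"): "viewer",
}


def role_for_api_key(api_key: str):
    """Return the role bound to an API key, or None if the key is unknown."""
    presented = hash_api_key(api_key)
    for stored_hash, role in API_KEY_ROLES.items():
        # compare_digest runs in constant time, which avoids leaking
        # how many leading characters of the hash matched.
        if hmac.compare_digest(presented, stored_hash):
            return role
    return None


def is_allowed(role, action: str) -> bool:
    """Check whether a role grants an action; unknown roles grant nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


role = role_for_api_key("example-key-for-docs-only")
print(role, is_allowed(role, "read"), is_allowed(role, "write"))
```

Separating "who is this" (`role_for_api_key`) from "what may they do" (`is_allowed`) keeps the authorization rules in one table that can be audited and changed without touching the authentication code.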

Data Masking and Anonymization

Data masking and anonymization involve transforming sensitive data to protect it from unauthorized access, while still preserving its usability for analysis or testing. Some common techniques include:

- Tokenization

- Generalization

- K-anonymity

Example: Using Python and pandas to mask sensitive data

Sample input (raw_data.csv):

    first_name,last_name,ssn,email
    Alice,Smith,123-45-6789,alice.smith@email.com
    Bob,Johnson,234-56-7890,bob.johnson@email.com
    Charlie,Williams,345-67-8901,charlie.williams@email.com
    Diana,Brown,456-78-9012,diana.brown@email.com
    Eva,Davis,567-89-0123,eva.davis@email.com

    import pandas as pd

    # Function to mask the last four digits of SSN
    def mask_ssn(ssn):
        return ssn[:-4] + 'XXXX'

    # Load data from CSV file
    data = pd.read_csv('raw_data.csv')

    # Mask sensitive data (e.g., replace the last four digits of the SSN with 'XXXX')
    data['ssn'] = data['ssn'].apply(mask_ssn)

    # Save masked data to a new CSV file
    data.to_csv('masked_data.csv', index=False)
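
The pandas example shows simple masking. The three techniques listed earlier (tokenization, generalization, and k-anonymity) can be illustrated with a small standard-library sketch. Two caveats: the tokenization here is a keyed hash (HMAC-SHA256), which is strictly pseudonymization rather than a vault-backed token service, and the `age` and `zip` records are made-up illustration data that do not come from the CSV above. All function names and `TOKEN_SECRET` are hypothetical.

```python
import hashlib
import hmac
from collections import Counter

# Assumption: in practice this secret comes from a secrets manager, not source code.
TOKEN_SECRET = b"replace-with-a-managed-secret"


def tokenize(value: str, secret: bytes = TOKEN_SECRET) -> str:
    """Replace a value with a deterministic keyed token (HMAC-SHA256, truncated)."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def generalize_age(age: int, bucket_size: int = 10) -> str:
    """Generalize an exact age into a range such as '30-39'."""
    low = (age // bucket_size) * bucket_size
    return f"{low}-{low + bucket_size - 1}"


def smallest_group_size(records, quasi_identifiers) -> int:
    """Return the size of the smallest group sharing the same quasi-identifiers.

    A dataset is k-anonymous for these columns when this value is at least k.
    """
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values(), default=0)


# Made-up records for illustration only.
records = [
    {"age": 34, "zip": "12345"},
    {"age": 37, "zip": "12345"},
    {"age": 52, "zip": "12345"},
    {"age": 58, "zip": "12345"},
]
generalized = [{"age": generalize_age(r["age"]), "zip": r["zip"]} for r in records]

print(tokenize("123-45-6789"))
print(smallest_group_size(records, ["age", "zip"]))      # before generalization
print(smallest_group_size(generalized, ["age", "zip"]))  # after generalization
```

Because the token is deterministic, the same input always maps to the same token, so joins across tables still work; the trade-off is that anyone holding the secret can recompute tokens for guessed inputs.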

Auditing and Logging

Auditing and logging involve recording activities and events within the data pipeline for security, compliance, and troubleshooting purposes. Maintaining detailed logs and implementing regular audits can help you identify potential security issues and ensure the integrity of your data pipelines. Some best practices include:

- Log all data access and modification events

- Use a centralized logging system (e.g., ELK stack)

- Implement log rotation and retention policies

Example: Using Python and the logging library to log data pipeline events

    import logging

    # Configure logging settings
    logging.basicConfig(
        filename='data_pipeline.log',
        level=logging.INFO,
        format='%(asctime)s %(levelname)s: %(message)s',
        datefmt='%Y-%m-%d %H:%M:%S',
    )

    # Log an info message
    logging.info('Data pipeline started')

    # Log a warning message
    logging.warning('Missing data in input file')

    # Log an error message
    logging.error('Data pipeline failed due to an error')

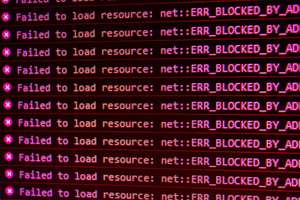
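
The log rotation and retention practice listed above can be sketched with `RotatingFileHandler` from the standard library. The function name `build_rotating_logger` and the default size and backup count are assumptions picked for illustration; suitable values depend on log volume and retention requirements.

```python
import logging
from logging.handlers import RotatingFileHandler


def build_rotating_logger(
    log_path: str,
    max_bytes: int = 1_000_000,
    backup_count: int = 5,
    name: str = "data_pipeline",
) -> logging.Logger:
    """Return a logger that rotates its file once it grows past max_bytes.

    At most backup_count old files are kept (log_path.1, log_path.2, ...);
    older ones are deleted, which acts as a simple retention policy.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Attach the handler only once so repeated calls do not duplicate log lines.
    if not logger.handlers:
        handler = RotatingFileHandler(
            log_path, maxBytes=max_bytes, backupCount=backup_count
        )
        handler.setFormatter(
            logging.Formatter(
                "%(asctime)s %(levelname)s: %(message)s",
                datefmt="%Y-%m-%d %H:%M:%S",
            )
        )
        logger.addHandler(handler)
    return logger
```

Calling `build_rotating_logger("data_pipeline.log").info("Data pipeline started")` writes to the same file as the earlier example, but the file is rolled over before it exceeds the configured size.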

Security Monitoring

Security monitoring involves continuously monitoring the data pipeline for potential security threats, vulnerabilities, and incidents. Implementing a robust security monitoring system can help you detect and respond to security events in a timely manner. Some best practices include:

- Use intrusion detection systems (IDS) and intrusion prevention systems (IPS)

- Monitor network traffic for unusual patterns

- Set up alerts for security incidents

Example: Using Python and the Watchdog library to monitor file changes in a data directory

    import sys
    import time
    from watchdog.observers import Observer
    from watchdog.events import FileSystemEventHandler

    class DataDirectoryHandler(FileSystemEventHandler):
        def on_modified(self, event):
            print(f'File modified: {event.src_path}')

        def on_created(self, event):
            print(f'File created: {event.src_path}')

        def on_deleted(self, event):
            print(f'File deleted: {event.src_path}')

    if __name__ == "__main__":
        path = sys.argv[1] if len(sys.argv) > 1 else '.'
        event_handler = DataDirectoryHandler()
        observer = Observer()
        observer.schedule(event_handler, path, recursive=True)
        observer.start()
        try:
            while True:
                time.sleep(1)
        except KeyboardInterrupt:
            observer.stop()
        observer.join()
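
The Watchdog example reports individual file events. The "set up alerts" practice can be sketched as a sliding-window counter that signals when too many suspicious events occur in a short period. This is a minimal sketch under stated assumptions: the class name `FailedLoginMonitor`, the threshold, and the window length are hypothetical, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import deque


class FailedLoginMonitor:
    """Signal an alert when failures within a sliding time window reach a threshold."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events = deque()  # timestamps of recent failures, oldest first

    def record_failure(self, timestamp: float) -> bool:
        """Record one failure; return True if an alert should fire.

        Assumption: timestamps are passed in non-decreasing order.
        """
        self._events.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while self._events and timestamp - self._events[0] > self.window_seconds:
            self._events.popleft()
        return len(self._events) >= self.threshold


monitor = FailedLoginMonitor(threshold=3, window_seconds=10.0)
for ts in (0.0, 1.0, 2.0, 20.0):
    print(ts, monitor.record_failure(ts))
```

In a running pipeline, the timestamp would typically come from `time.time()` and a `True` result would trigger a notification (email, chat webhook, or paging system) rather than a print.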

In short, data pipeline security protects the confidentiality, integrity, and availability of data. By combining encryption, authentication, authorization, data masking, auditing, logging, and security monitoring, data engineers can build secure and reliable data pipelines. The practices and code examples in this guide offer a starting point for applying them.