Data Pipeline Monitoring: Tools and Techniques for Ensuring Reliability

Data pipeline monitoring is the practice of continuously observing data pipelines so that they stay reliable, performant, and accurate. With it, data engineers can identify and resolve issues before they affect downstream applications and users. This article explores tools and techniques for data pipeline monitoring, covering metrics collection, log analysis, data validation, and alerting.

Data pipeline monitoring is crucial for several reasons:

- Reliability: Monitoring helps ensure that your data pipelines are running as expected, without errors or failures that could impact downstream applications and users.

- Performance: By monitoring key performance metrics, you can identify and resolve bottlenecks and inefficiencies in your data pipelines, ensuring optimal performance.

- Data quality: Monitoring allows you to detect and correct data quality issues, such as missing, incorrect, or duplicate data, before they propagate through your data pipelines.

- Compliance: In regulated industries, monitoring can help ensure compliance with data security, privacy, and retention requirements.

- Continuous improvement: By regularly monitoring your data pipelines, you can identify opportunities for optimization and improvement, driving ongoing innovation and growth.

Metrics Collection

Collecting and analyzing metrics is a fundamental aspect of data pipeline monitoring. Some key metrics to monitor include:

- Task execution times: Monitor the time taken to execute individual tasks or stages in your data pipelines, helping you identify bottlenecks and optimize performance.

- Success rates: Track the success rates of your data pipeline tasks, so that a drop in the rate alerts you to potential issues and failures.

- Resource usage: Monitor the utilization of resources such as CPU, memory, and disk space, helping you ensure that your data pipelines are running efficiently and within capacity constraints.

- Data volumes: Track the volume of data processed by your data pipelines, allowing you to detect and resolve issues related to data ingestion, processing, or storage.
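As one illustration of tracking data volumes, the sketch below flags a run whose row count deviates sharply from recent history. It is a minimal example using only the Python standard library; the function name `volume_is_anomalous`, the z-score threshold of 3, and the row counts are assumptions made for illustration, not values from any particular platform.

```python
from statistics import mean, stdev


def volume_is_anomalous(history, current, z_threshold=3.0):
    """Return True when `current` is more than `z_threshold` sample
    standard deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past runs had identical volume, so any change is notable.
        return current != mu
    return abs(current - mu) / sigma > z_threshold


# Hypothetical row counts from the last seven daily runs.
recent_row_counts = [10_120, 9_980, 10_050, 10_200, 9_900, 10_010, 10_090]

print(volume_is_anomalous(recent_row_counts, 10_060))  # False: close to the mean
print(volume_is_anomalous(recent_row_counts, 4_200))   # True: sharp drop
```

A fixed z-score threshold is a simple starting point; pipelines with strong weekly or seasonal patterns would likely need a baseline that accounts for them.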

There are various tools and techniques available for collecting and analyzing metrics from your data pipelines, such as:

- Custom instrumentation: Add custom code to your data pipelines to collect and report metrics, using client libraries for systems such as StatsD, Prometheus, or OpenTelemetry.

- Built-in monitoring tools: Use the monitoring tools and features provided by your data pipeline platform or framework, such as Apache Airflow's built-in metrics, AWS Glue's monitoring features, or Azure Data Factory's monitoring capabilities.

- Third-party monitoring tools: Leverage third-party monitoring tools, such as Datadog or New Relic, to collect, store, and analyze metrics from your data pipelines, or Grafana to visualize and alert on metrics held in a separate data source.
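To make custom instrumentation concrete, here is a minimal sketch that records task execution times and success rates in memory. The `PipelineMetrics` class and the task names are hypothetical; a real pipeline would more likely forward these values to a metrics backend such as the ones listed above rather than keep them in a dictionary.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class PipelineMetrics:
    """In-memory store for per-task durations and outcomes (illustrative only)."""

    def __init__(self):
        self.durations = defaultdict(list)
        self.outcomes = defaultdict(lambda: {"success": 0, "failure": 0})

    @contextmanager
    def track(self, task_name):
        """Time the wrapped block and count it as a success or a failure."""
        start = time.perf_counter()
        try:
            yield
        except Exception:
            self.outcomes[task_name]["failure"] += 1
            raise  # let the caller decide how to handle the error
        else:
            self.outcomes[task_name]["success"] += 1
        finally:
            self.durations[task_name].append(time.perf_counter() - start)

    def success_rate(self, task_name):
        """Return the fraction of successful runs, or None if the task never ran."""
        counts = self.outcomes[task_name]
        total = counts["success"] + counts["failure"]
        return counts["success"] / total if total else None


metrics = PipelineMetrics()

with metrics.track("extract"):
    rows = list(range(1000))  # stand-in for real extraction work

try:
    with metrics.track("load"):
        raise ConnectionError("warehouse unavailable")  # simulated failure
except ConnectionError:
    pass

print(metrics.success_rate("extract"))  # 1.0
print(metrics.success_rate("load"))     # 0.0
```

Wrapping tasks in a context manager keeps the timing and counting logic in one place, so individual tasks do not need to know how metrics are reported.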

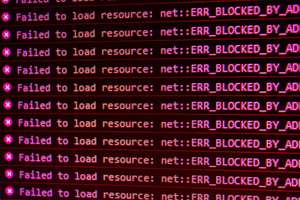

Log Analysis

Analyzing logs generated by your data pipelines is another critical aspect of data pipeline monitoring. Logs can provide valuable insights into the internal workings of your data pipelines, helping you identify and resolve issues related to performance, errors, and data quality.

Some best practices for log analysis include:

- Centralize your logs: Collect logs from all your data pipeline components in a centralized location, making it easier to search, analyze, and correlate log data.

- Use log aggregation tools: Leverage log aggregation tools, such as Logstash, Fluentd, or Amazon Kinesis Data Firehose (since renamed Amazon Data Firehose), to collect, process, and store log data from your data pipelines.

- Implement log analysis and visualization tools: Use log analysis and visualization tools, such as Elasticsearch (typically paired with Kibana for visualization), Logz.io, or Splunk, to search, analyze, and visualize log data, enabling you to identify patterns and trends in your data pipelines.

- Set up log alerts: Configure alerts based on log data, notifying you of potential issues or anomalies in your data pipelines.
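The practices above are easier to apply when logs are structured. The following sketch emits JSON log lines with Python's standard `logging` module and then counts errors per task to decide which tasks warrant an alert. The `JsonFormatter` class, the `task` field, the logger name, and the threshold of two errors are all assumptions made for this example; in practice the counting step would usually run inside a log analysis tool rather than in the pipeline itself.

```python
import io
import json
import logging
from collections import Counter


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "task": getattr(record, "task", "unknown"),
            "message": record.getMessage(),
        })


def errors_by_task(log_lines):
    """Count ERROR-level entries per task from JSON log lines."""
    counts = Counter()
    for line in log_lines:
        entry = json.loads(line)
        if entry["level"] == "ERROR":
            counts[entry["task"]] += 1
    return counts


# Write logs to an in-memory stream that stands in for a central log store.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("pipeline_demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("read 1000 rows", extra={"task": "extract"})
logger.error("row 17 failed type coercion", extra={"task": "transform"})
logger.error("row 42 failed type coercion", extra={"task": "transform"})

error_counts = errors_by_task(stream.getvalue().splitlines())
ERROR_ALERT_THRESHOLD = 2  # hypothetical threshold
tasks_to_alert = [t for t, n in error_counts.items() if n >= ERROR_ALERT_THRESHOLD]
print(tasks_to_alert)  # ['transform']
```

Because each line is a self-describing JSON object, the same logs can be searched, aggregated, and correlated by field once they are centralized.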

Data Validation

Validating the data flowing through your data pipelines is essential for ensuring data quality and preventing data corruption. Some techniques for data validation include:

- Schema validation: Use schema validation to ensure that your data conforms to a predefined structure, catching issues such as missing, extra, or incorrectly formatted fields.

- Range checks: Implement range checks to ensure that your data falls within acceptable bounds, catching issues such as outliers or invalid values.

- Consistency checks: Conduct consistency checks to ensure that your data is logically consistent, such as verifying that foreign key relationships are maintained or that calculated fields are accurate.

- Data profiling: Use data profiling techniques, such as summary statistics, histograms, or frequency analysis, to gain insights into your data and identify potential data quality issues.

- Data lineage tracking: Track the lineage of your data, including its source, transformations, and destination, enabling you to identify and resolve issues related to data provenance and accuracy.

There are various tools and libraries available for implementing data validation in your data pipelines, such as Apache NiFi, Great Expectations, or Deequ.
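The first three techniques can be sketched in plain Python without any of these libraries. The example below applies a schema check, a range check, and a consistency check to a single record. The `EXPECTED_SCHEMA` fields, the quantity bounds, and the rule that `total` equals `quantity * unit_price` are hypothetical and chosen only to illustrate the idea.

```python
# Hypothetical schema for an order record: field name -> expected type.
EXPECTED_SCHEMA = {"order_id": int, "quantity": int, "unit_price": float, "total": float}


def validate_record(record):
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []

    # Schema validation: missing, extra, or wrongly typed fields.
    missing = EXPECTED_SCHEMA.keys() - record.keys()
    extra = record.keys() - EXPECTED_SCHEMA.keys()
    errors += [f"missing field: {name}" for name in sorted(missing)]
    errors += [f"unexpected field: {name}" for name in sorted(extra)]
    for name, expected_type in EXPECTED_SCHEMA.items():
        if name in record and not isinstance(record[name], expected_type):
            errors.append(f"{name}: expected {expected_type.__name__}")
    if errors:
        return errors  # later checks assume a well-formed record

    # Range check: quantity must fall within acceptable bounds.
    if not 1 <= record["quantity"] <= 1000:
        errors.append("quantity out of range [1, 1000]")

    # Consistency check: the calculated field must agree with its inputs.
    if abs(record["total"] - record["quantity"] * record["unit_price"]) > 0.01:
        errors.append("total does not equal quantity * unit_price")

    return errors


good = {"order_id": 1, "quantity": 3, "unit_price": 9.99, "total": 29.97}
bad_range = {"order_id": 2, "quantity": 0, "unit_price": 9.99, "total": 0.0}
bad_schema = {"order_id": "A1", "quantity": 3, "unit_price": 9.99}

print(validate_record(good))        # []
print(validate_record(bad_range))   # ['quantity out of range [1, 1000]']
print(validate_record(bad_schema))  # ['missing field: total', 'order_id: expected int']
```

Returning a list of errors instead of raising on the first failure lets a pipeline log every problem with a record and route it to a quarantine location in one pass.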

Alerting

Setting up alerts is crucial for ensuring that you are notified of potential issues or anomalies in your data pipelines before they impact downstream applications and users. Some best practices for alerting include:

- Define meaningful alert thresholds: Set alert thresholds based on your data pipeline's performance and error tolerances, ensuring that you are notified of potential issues without being overwhelmed by false alarms.

- Use multiple alert channels: Leverage multiple alert channels, such as email, SMS, or instant messaging, to ensure that you receive notifications in a timely manner and can take appropriate action.

- Integrate with incident management tools: Integrate your alerting system with incident management tools, such as PagerDuty, Opsgenie, or VictorOps (now Splunk On-Call), to streamline incident response and resolution.

- Automate remediation actions: Where possible, automate remediation actions, such as restarting failed tasks or rolling back to a previous version of your data pipeline, to minimize the impact of issues and reduce manual intervention.
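One common way to keep thresholds meaningful is to require several consecutive breaches before an alert fires, so that a single noisy measurement does not page anyone. The sketch below shows that idea; the `ThresholdAlert` class, the 5% error-rate threshold, and the sample values are assumptions for illustration, and sending the notification through a real channel is left out.

```python
class ThresholdAlert:
    """Fire after N consecutive readings above a threshold; resolve on recovery."""

    def __init__(self, threshold, consecutive_breaches=3):
        self.threshold = threshold
        self.consecutive_breaches = consecutive_breaches
        self._streak = 0
        self._firing = False

    def observe(self, value):
        """Return 'fire', 'resolve', or None for the given reading."""
        if value > self.threshold:
            self._streak += 1
            if self._streak >= self.consecutive_breaches and not self._firing:
                self._firing = True
                return "fire"
        else:
            self._streak = 0
            if self._firing:
                self._firing = False
                return "resolve"
        return None


# Hypothetical per-run error rates for one pipeline task.
alert = ThresholdAlert(threshold=0.05, consecutive_breaches=3)
error_rates = [0.01, 0.09, 0.02, 0.07, 0.08, 0.10, 0.12, 0.01]
events = [alert.observe(rate) for rate in error_rates]
print(events)
# [None, None, None, None, None, 'fire', None, 'resolve']
```

The isolated spike to 0.09 is ignored, the alert fires once on the third consecutive breach rather than on every breach, and a single "resolve" event is emitted when the rate recovers.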

Monitoring Tools for Data Pipelines

There is a wide range of monitoring tools available to help you monitor and manage your data pipelines. Some popular options include:

- Apache Airflow: A popular open-source data pipeline platform with built-in monitoring features, such as task execution logs, metrics collection, and a web-based dashboard.

- AWS Glue: A fully managed ETL service from Amazon Web Services, which includes monitoring features like Amazon CloudWatch integration, job logs, and data quality checks.

- Azure Data Factory: A cloud-based data integration service from Microsoft, offering monitoring capabilities like performance metrics, log analysis, and integration with Azure Monitor.

- Google Cloud Dataflow: A fully managed data processing service from Google Cloud, providing monitoring features such as Cloud Monitoring (formerly Stackdriver) integration, custom metrics, and log analysis.

- Datadog: A popular monitoring platform that supports data pipeline monitoring through integrations with various data pipeline platforms, custom metrics, log analysis, and alerting.

- New Relic: A performance monitoring platform that can be used to monitor data pipelines, offering features such as custom instrumentation, log analysis, and alerting.

In conclusion, monitoring data pipelines is essential for ensuring their reliability, performance, and data quality. By leveraging tools and techniques for metrics collection, log analysis, data validation, and alerting, you can proactively identify and resolve issues in your data pipelines before they impact downstream applications and users. Investing in data pipeline monitoring improves the overall health and resilience of your data ecosystem and helps ensure the delivery of accurate, timely insights to your organization.