Best Practices for Data Pipeline Design and Optimization

Data pipelines play a crucial role in modern data-driven organizations, enabling data to flow seamlessly between various systems, applications, and storage platforms. Designing and optimizing data pipelines can be challenging, as engineers must balance factors such as scalability, performance, maintainability, and robustness. In this article, we will explore best practices for data pipeline design and optimization, covering topics such as data partitioning, schema design, caching, and monitoring.

Data Pipeline Design Principles

In order to design effective data pipelines, it's essential to follow key principles that promote scalability, maintainability, and robustness. Some of these principles include:

- Modularity: Break down complex data pipelines into smaller, reusable components that can be easily maintained, tested, and updated.

- Versioning: Use a version control system (e.g., Git) to track changes to your data pipelines, enabling collaboration and making it easier to roll back to previous versions in case of issues.

- Incremental processing: Whenever possible, process only new or changed data (for example, records added since the last successful run) rather than reprocessing the full dataset on every run, to minimize resource usage and reduce the impact of failures.

- Parallelism: Leverage parallel processing to increase the throughput and efficiency of your data pipelines.

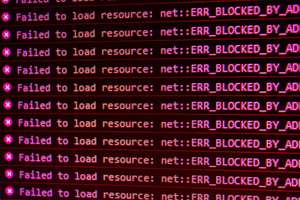

- Error handling and retries: Implement robust error handling and retries to ensure data pipeline resilience and minimize the impact of transient failures.

- Monitoring and alerting: Monitor the health and performance of your data pipelines, and set up alerts to notify you of potential issues.
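To make the error-handling principle concrete, here is a minimal Python sketch of retries with capped exponential backoff and jitter. `TransientError` and `retry_with_backoff` are hypothetical names used for illustration, and the default delays are arbitrary; in practice you would retry only errors you know to be transient, such as timeouts or throttling responses, and let everything else fail fast.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures that are safe to retry (timeouts, throttling)."""


def retry_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the failure surface to alerting
            # Delay doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay; a random
            # fraction of it is used so parallel workers don't retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")


# Usage: a simulated extract step that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_extract() -> list:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("simulated timeout")
    return ["row-1", "row-2"]


rows = retry_with_backoff(flaky_extract, sleep=lambda seconds: None)  # skip real sleeping
print(rows)  # ['row-1', 'row-2']
```

Injecting `sleep` keeps the helper testable without real waiting; exceptions other than `TransientError` propagate immediately.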

Data Partitioning and Sharding

Data partitioning and sharding are essential techniques for optimizing the performance and scalability of data pipelines. By distributing data across multiple storage systems, partitions, or shards, you can:

- Balance the load on your storage systems, reducing the likelihood of bottlenecks and improving query performance.

- Isolate failures to a single partition or shard, increasing the overall fault tolerance and resilience of your data pipelines.

- Scale out your storage systems horizontally, allowing you to handle increasing data volumes and processing demands.
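One common way to assign records to shards is to hash the partitioning key. The sketch below assumes a fixed shard count and uses SHA-256 purely as a stable, well-distributed hash (Python's built-in `hash()` is salted per process for strings, so it would route the same key differently across runs). The `shard_for` name and the `customer-` keys are illustrative.

```python
import hashlib
from collections import Counter


def shard_for(key: str, num_shards: int) -> int:
    """Map a partitioning key to a shard index in [0, num_shards) using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then take it modulo the shard count.
    return int.from_bytes(digest[:8], "big") % num_shards


# Usage: spread 10,000 hypothetical customer IDs over 4 shards and inspect the balance.
keys = [f"customer-{i}" for i in range(10_000)]
load = Counter(shard_for(k, 4) for k in keys)
print(sorted(load.items()))  # expect roughly 2,500 keys per shard
```

With plain modulo hashing, changing `num_shards` remaps most keys; schemes such as consistent hashing reduce how much data has to move when you re-shard.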

Some best practices for data partitioning and sharding include:

- Choose a partitioning or sharding key that balances the load across your storage systems and minimizes the impact of hotspots.

- Consider time-based partitioning for time-series data, enabling you to efficiently store, query, and age out historical data.

- Regularly monitor the distribution of data across your partitions or shards, and re-partition or re-shard as needed to maintain optimal performance.
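For time-series data, a daily partition layout plus a retention window can be sketched as follows. The `dt=YYYY-MM-DD` directory convention (often called Hive-style partitioning), the bucket path, and the 14-day retention period are assumptions for illustration; the point is that aging out data becomes a matter of dropping whole partitions rather than deleting individual rows.

```python
from datetime import date, datetime, timedelta, timezone
from typing import List


def partition_path(root: str, event_time: datetime) -> str:
    """Build a daily, Hive-style partition path such as <root>/dt=2024-05-01.

    event_time should be timezone-aware; it is normalized to UTC so that events
    from different regions land in the same day's partition.
    """
    utc_day = event_time.astimezone(timezone.utc).date()
    return f"{root}/dt={utc_day.isoformat()}"


def expired_partitions(partitions: List[date], today: date, retention_days: int) -> List[date]:
    """Return partition dates older than the retention window; each can be dropped whole."""
    cutoff = today - timedelta(days=retention_days)
    return [d for d in partitions if d < cutoff]


# Usage with a hypothetical bucket and a 14-day retention policy.
ts = datetime(2024, 5, 1, 23, 30, tzinfo=timezone.utc)
print(partition_path("s3://example-bucket/events", ts))  # s3://example-bucket/events/dt=2024-05-01

april = [date(2024, 4, day) for day in range(1, 31)]
old = expired_partitions(april, today=date(2024, 5, 1), retention_days=14)
print(old[0], old[-1], len(old))  # 2024-04-01 2024-04-16 16
```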

Schema Design

Effective schema design is crucial for optimizing the performance and maintainability of your data pipelines. Some best practices for schema design include:

- Normalize your schema to reduce data redundancy and improve data integrity, making it easier to maintain and update your data.

- Denormalize your schema when necessary to optimize query performance, for example to avoid expensive joins in read-heavy workloads, keeping in mind that duplicated values must then be kept consistent.

- Use appropriate data types and constraints to ensure data quality and minimize storage requirements.

- Index your data judiciously to improve query performance, while being mindful of the trade-offs, such as increased storage requirements and update overheads.

- Evolve your schema over time to accommodate new data sources, requirements, and use cases.
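The following sketch uses Python's built-in `sqlite3` module to show several of these practices together: a normalized pair of tables, explicit types and constraints that reject bad data at write time, and a composite index on the columns that downstream queries are assumed to filter on. The table and column names are hypothetical.

```python
import sqlite3

# Normalized design: each customer is stored once; orders reference customers by key.
# Money is stored as integer cents to avoid floating-point rounding.
SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id     INTEGER PRIMARY KEY,
    customer_id  INTEGER NOT NULL REFERENCES customers(customer_id),
    amount_cents INTEGER NOT NULL CHECK (amount_cents >= 0),
    created_at   TEXT NOT NULL
);
-- Composite index for the assumed common query: a customer's orders by time.
CREATE INDEX idx_orders_customer_created ON orders (customer_id, created_at);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FOREIGN KEY clauses when enabled
conn.executescript(SCHEMA)
conn.execute("INSERT INTO customers VALUES (1, 'ana@example.com')")
conn.execute("INSERT INTO orders VALUES (10, 1, 2500, '2024-05-01T12:00:00Z')")

# Constraints reject bad rows at write time instead of letting them flow downstream.
for bad_row in [
    (11, 1, -5, "2024-05-01T12:05:00Z"),   # violates CHECK (negative amount)
    (12, 99, 100, "2024-05-01T12:10:00Z"),  # violates FOREIGN KEY (unknown customer)
]:
    try:
        conn.execute("INSERT INTO orders VALUES (?, ?, ?, ?)", bad_row)
    except sqlite3.IntegrityError as exc:
        print("rejected:", exc)
```

SQLite's column types are flexible by default (newer versions offer a `STRICT` table option), so in this sketch the `CHECK` and `FOREIGN KEY` constraints do most of the enforcement; other databases apply declared types more strictly.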

Caching

Caching is a powerful technique for optimizing the performance of data pipelines by storing the results of expensive operations and reusing them for subsequent requests. Some best practices for caching include:

- Identify the most expensive operations in your data pipelines, such as database queries, API calls, or complex calculations, and target them for caching.

- Choose an appropriate caching strategy, such as in-memory caching, distributed caching, or materialized views, depending on your requirements and constraints.

- Set appropriate cache expiration policies to ensure data freshness and prevent stale data from being served to your users.

- Monitor cache hit rates and adjust your caching strategy as needed to maximize the benefits of caching while minimizing resource usage.

- Use versioned cache keys (for example, include a data version or last-modified timestamp in each key) so that entries are effectively invalidated when the underlying data changes and stale results are never read.
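A minimal in-memory sketch of expiration plus versioned keys follows. `VersionedTTLCache` is a hypothetical class, not a library API; it assumes the caller can supply a version number that changes whenever the underlying data changes (for example, a table's last-modified counter). It also counts hits and misses so the hit rate can be monitored.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class VersionedTTLCache:
    """In-memory cache with per-entry expiry and versioned keys (illustrative sketch)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[Tuple[Hashable, int], Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, version: int, compute: Callable[[], Any]) -> Any:
        # The data version is part of the key: bumping it makes older entries unreachable.
        full_key = (key, version)
        entry = self._store.get(full_key)
        now = self.clock()
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = compute()
        self._store[full_key] = (now + self.ttl, value)
        return value

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Usage with a fake clock so expiry can be demonstrated without waiting.
now = [0.0]
cache = VersionedTTLCache(ttl_seconds=60, clock=lambda: now[0])
query_runs = []


def daily_revenue_query() -> int:
    query_runs.append(now[0])  # stands in for an expensive database query
    return 42


cache.get_or_compute("daily_revenue", version=1, compute=daily_revenue_query)  # miss
cache.get_or_compute("daily_revenue", version=1, compute=daily_revenue_query)  # hit
cache.get_or_compute("daily_revenue", version=2, compute=daily_revenue_query)  # miss: data changed
now[0] = 61.0
cache.get_or_compute("daily_revenue", version=2, compute=daily_revenue_query)  # miss: entry expired
print(cache.hits, cache.misses, cache.hit_rate)  # 1 3 0.25
```

This sketch never evicts superseded versions; a production cache would also bound its size, for example with LRU eviction, or delegate to a dedicated caching system.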

Data Pipeline Optimization Techniques

Optimizing data pipelines involves identifying bottlenecks and addressing them using various techniques, such as:

- Batch processing: Group multiple operations together to reduce overhead and improve performance. This is particularly effective for write-heavy workloads, where batching can help reduce the impact of network latency and resource contention.

- Compression: Use data compression to reduce storage requirements and network bandwidth consumption. Compression can be particularly effective for large datasets and when transferring data between systems.

- Parallelism: Leverage parallel processing to improve the throughput and efficiency of your data pipelines. This can be achieved using techniques such as multithreading, multiprocessing, or distributed computing frameworks like Apache Spark or Hadoop.

- Data pruning: Remove unnecessary data from your pipelines to reduce storage requirements and processing overhead. This can be achieved through techniques like data filtering, aggregation, or summarization.
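Batching, compression, and data pruning compose naturally, as this sketch shows. The record shape, field names, and batch size are hypothetical, and the actual write or upload step is left as a comment because it depends on the target system.

```python
import gzip
import json
from itertools import islice
from typing import Dict, Iterable, Iterator, List

Record = Dict[str, object]


def prune(records: Iterable[Record], keep: List[str]) -> Iterator[Record]:
    """Data pruning: drop fields that no downstream step uses."""
    for record in records:
        yield {name: record[name] for name in keep}


def batched(records: Iterable[Record], size: int) -> Iterator[List[Record]]:
    """Group records into lists of at most `size` so one write carries many records."""
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def encode_batch(batch: List[Record]) -> bytes:
    """Serialize a batch as newline-delimited JSON and gzip it for storage or transfer."""
    payload = "\n".join(json.dumps(record) for record in batch).encode("utf-8")
    return gzip.compress(payload)


# Usage: 1,000 hypothetical rows carrying a bulky field nobody downstream needs.
rows = ({"id": i, "status": "ok", "debug_blob": "x" * 200} for i in range(1_000))
payloads = []
for batch in batched(prune(rows, keep=["id", "status"]), size=250):
    payloads.append(encode_batch(batch))  # in a real pipeline: one bulk write per batch
print(len(payloads))  # 4
```

Because every stage is a generator, records stream through pruning and batching without the whole dataset being held in memory at once.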

Monitoring and Alerting

Monitoring and alerting are essential for maintaining the health and performance of your data pipelines. Some best practices for monitoring and alerting include:

- Collect and analyze metrics about your data pipelines, such as task execution times, success rates, resource usage, and data volumes.

- Set up alerts to notify you of potential issues, such as slow-running tasks, resource contention, or data quality problems.

- Use monitoring tools and dashboards to visualize the state of your data pipelines, making it easier to identify and troubleshoot issues.

- Conduct regular performance audits to identify bottlenecks and opportunities for optimization.
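As a starting point for collecting task-level metrics, a context manager can record durations and failures and log a warning when a task exceeds a threshold. In this sketch the `track` helper and `TaskMetrics` container are hypothetical, and the log warning stands in for whatever alerting channel you actually use; a real deployment would typically export these values to a metrics system rather than keep them in process memory.

```python
import logging
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline.metrics")


@dataclass
class TaskMetrics:
    """In-process store for per-task measurements (a stand-in for a metrics backend)."""
    durations: Dict[str, List[float]] = field(default_factory=dict)
    failures: Dict[str, int] = field(default_factory=dict)


metrics = TaskMetrics()


@contextmanager
def track(task: str, alert_after_s: float) -> Iterator[None]:
    """Time a task, count its failures, and warn when it exceeds alert_after_s seconds."""
    start = time.perf_counter()
    try:
        yield
    except Exception:
        metrics.failures[task] = metrics.failures.get(task, 0) + 1
        log.error("task %s failed", task)
        raise
    finally:
        elapsed = time.perf_counter() - start
        metrics.durations.setdefault(task, []).append(elapsed)
        if elapsed > alert_after_s:
            # Placeholder for a real alert (pager, chat webhook, ticket).
            log.warning("ALERT: %s took %.2fs (threshold %.2fs)", task, elapsed, alert_after_s)


# Usage: wrap each pipeline step; the sleep stands in for real work.
with track("load_orders", alert_after_s=5.0):
    time.sleep(0.01)

try:
    with track("transform_orders", alert_after_s=5.0):
        raise ValueError("simulated bad input")
except ValueError:
    pass  # the failure was counted before being re-raised

print(metrics.failures)  # {'transform_orders': 1}
```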

Testing and Validation

Testing and validation are critical for ensuring the reliability and accuracy of your data pipelines. Some best practices for testing and validation include:

- Implement unit tests for your data pipeline components, ensuring that they function correctly in isolation.

- Conduct integration tests to validate that your data pipeline components work together as expected.

- Use data validation techniques, such as schema validation, range checks, and consistency checks, to ensure data quality and prevent data corruption.

- Automate your testing and validation processes using continuous integration and continuous deployment (CI/CD) tools, ensuring that your data pipelines are tested and validated as part of your development workflow.
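The validation techniques above can be expressed as a plain function that returns a list of problems, which is easy to unit test and to run in CI. The record fields (`order_id`, `amount_cents`, `refund_cents`) and the rules are hypothetical examples of a schema check, a range check, and a consistency check.

```python
from typing import Any, Dict, List

# Hypothetical expected schema for an "orders" record.
EXPECTED_TYPES = {"order_id": int, "amount_cents": int, "refund_cents": int}


def validate_order(record: Dict[str, Any]) -> List[str]:
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []
    # Schema validation: every expected field is present with exactly the expected type
    # (type(...) is used rather than isinstance so that True/False are not accepted as ints).
    for name, expected in EXPECTED_TYPES.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif type(record[name]) is not expected:
            errors.append(f"{name}: expected {expected.__name__}, got {type(record[name]).__name__}")
    if errors:
        return errors  # later checks assume the schema is valid
    # Range checks.
    for name in ("amount_cents", "refund_cents"):
        if record[name] < 0:
            errors.append(f"{name} must be >= 0")
    # Consistency check: a refund cannot exceed the original charge.
    if record["refund_cents"] > record["amount_cents"]:
        errors.append("refund_cents exceeds amount_cents")
    return errors


# Unit tests in pytest style; pytest collects functions whose names start with test_.
def test_valid_order_passes():
    assert validate_order({"order_id": 1, "amount_cents": 500, "refund_cents": 0}) == []


def test_wrong_type_is_reported():
    errors = validate_order({"order_id": "1", "amount_cents": 500, "refund_cents": 0})
    assert errors == ["order_id: expected int, got str"]


def test_refund_larger_than_amount_is_flagged():
    errors = validate_order({"order_id": 2, "amount_cents": 500, "refund_cents": 900})
    assert errors == ["refund_cents exceeds amount_cents"]
```

Running these tests under pytest as a step in the CI/CD pipeline means every change to the validation rules is checked before it reaches production data.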

Documentation and Knowledge Sharing

Documentation and knowledge sharing are essential for maintaining and improving your data pipelines over time. Some best practices for documentation and knowledge sharing include:

- Document your data pipeline components, including their purpose, inputs, outputs, and any dependencies or assumptions.

- Use descriptive naming conventions for your data pipeline components, making it easier for others to understand their purpose and function.

- Share your knowledge and expertise with your team and organization, encouraging collaboration and continuous improvement.

In summary, designing and optimizing data pipelines is a challenging yet essential task for data engineers. By following best practices for data partitioning, schema design, caching, monitoring, and other aspects of data pipeline design, you can build scalable, maintainable, and robust data pipelines that meet the needs of your organization. As you gain experience and expertise in data pipeline design and optimization, you'll be better equipped to tackle the complex challenges of modern data engineering and deliver valuable insights to your organization.